A guide to data modelling: Key principles and design considerations

Designing and implementing an effective information management system starts with creating a well-structured data model tailored to your project’s specific needs. A good data model ensures that your database is organized, efficient, and capable of supporting the flow of information crucial to your project's success.

The complexity of social issues makes it crucial to have a clear and well-defined data model, especially in fields like humanitarian and development work. These areas often involve multiple stakeholders, diverse data sources, and intricate relationships. A strong data model helps navigate these complexities by providing a structured framework for managing and analyzing data effectively.

In this article, we look at what a data model is, key considerations for data model design, and the fundamental principles and frameworks that guide it.

Additionally, you may find it beneficial to read our article on project-level monitoring and evaluation, which offers insights into effective data management practices, creating a valuable connection between the two topics.

You can also watch the webinar "Fundamentals of data modelling in humanitarian and development contexts" to learn more.

ActivityInfo can support your data model design, and our Customer Success team can help you get set up if needed. To learn more, do not hesitate to contact us.

What is a data model?

A data model is a diagram that visually represents a conceptual framework for organizing, defining, and showing the relationships between data elements. It outlines data structures and their interactions, offering a systematic way to store, arrange, and access data. This visual method helps clarify complex connections between various data points, simplifying the design of efficient and well-structured databases. By ensuring consistency and transparency, a data model plays a key role in data management, guiding the development of reliable data systems and promoting effective data usage and retrieval.

There are several types of data models, each with its own unique structure and use cases. Understanding these different data models is crucial for selecting the right approach to data management.

The relational data model is the most widely used today. It organizes data into tables (also known as relations), where each table consists of rows and columns. The strength of the relational model lies in its use of keys to establish relationships between tables, which allows it to support complex queries and transactions. The relational model is also flexible and scalable, and its query language is robust and remains efficient with large datasets.
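As a minimal sketch of how keys relate tables, the example below uses Python's built-in `sqlite3` module with an in-memory database. The table names, column names, and sample rows are assumptions made for illustration and do not come from any particular system.

```python
import sqlite3

# In-memory database; all table and column names are illustrative assumptions.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE locations (
        location_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL
    );
    CREATE TABLE beneficiaries (
        beneficiary_id INTEGER PRIMARY KEY,
        full_name      TEXT NOT NULL,
        location_id    INTEGER REFERENCES locations(location_id)
    );
    INSERT INTO locations VALUES (1, 'North Camp'), (2, 'South Camp');
    INSERT INTO beneficiaries VALUES
        (101, 'A. Example', 1),
        (102, 'B. Example', 1),
        (103, 'C. Example', 2);
""")

# The shared location_id key lets a single query combine both tables.
rows = conn.execute("""
    SELECT l.name, COUNT(*) AS beneficiaries
    FROM beneficiaries AS b
    JOIN locations AS l ON l.location_id = b.location_id
    GROUP BY l.name
    ORDER BY l.name
""").fetchall()

for name, count in rows:
    print(name, count)
```

Because each beneficiary row stores only a location ID, the location name lives in one place and the join brings the two tables together at query time.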

Key considerations for data model design

- Data collection, organization, integrity, and analysis: A well-structured data model enhances your ability to efficiently store, retrieve, and manipulate data. By defining rules and constraints, it ensures data integrity and consistency. For example, establishing relationships between tables can simplify data collection by allowing you to focus on essential data for each entity. Additionally, the model facilitates analysis by outlining potential analytical approaches based on the relationships within the data.

- Security: Security is another vital consideration when designing a data model. Defining who needs access to specific data, and why, helps meet policy requirements and protects sensitive information. A clear security framework ensures that data access is aligned with organizational policies and compliance regulations.

- Scalability and documentation: A good data model serves as a blueprint for future growth, making it easier to expand and scale your data system. It provides a clear, structured representation of the data, which is crucial for ongoing documentation and for anyone who needs to understand the system as it evolves.

- Data integration: Most organizations rely on multiple data sources, which can create silos of information. A well-designed data model defines how different systems and data sources relate to one another, enabling smoother data integration and a more holistic view of the organization’s data landscape.

- Communication: A data model provides a common language for communication between different stakeholders, including data scientists, developers, and non-technical team members. This shared understanding ensures that everyone involved in the project can collaborate more effectively, making it easier to align objectives and expectations.

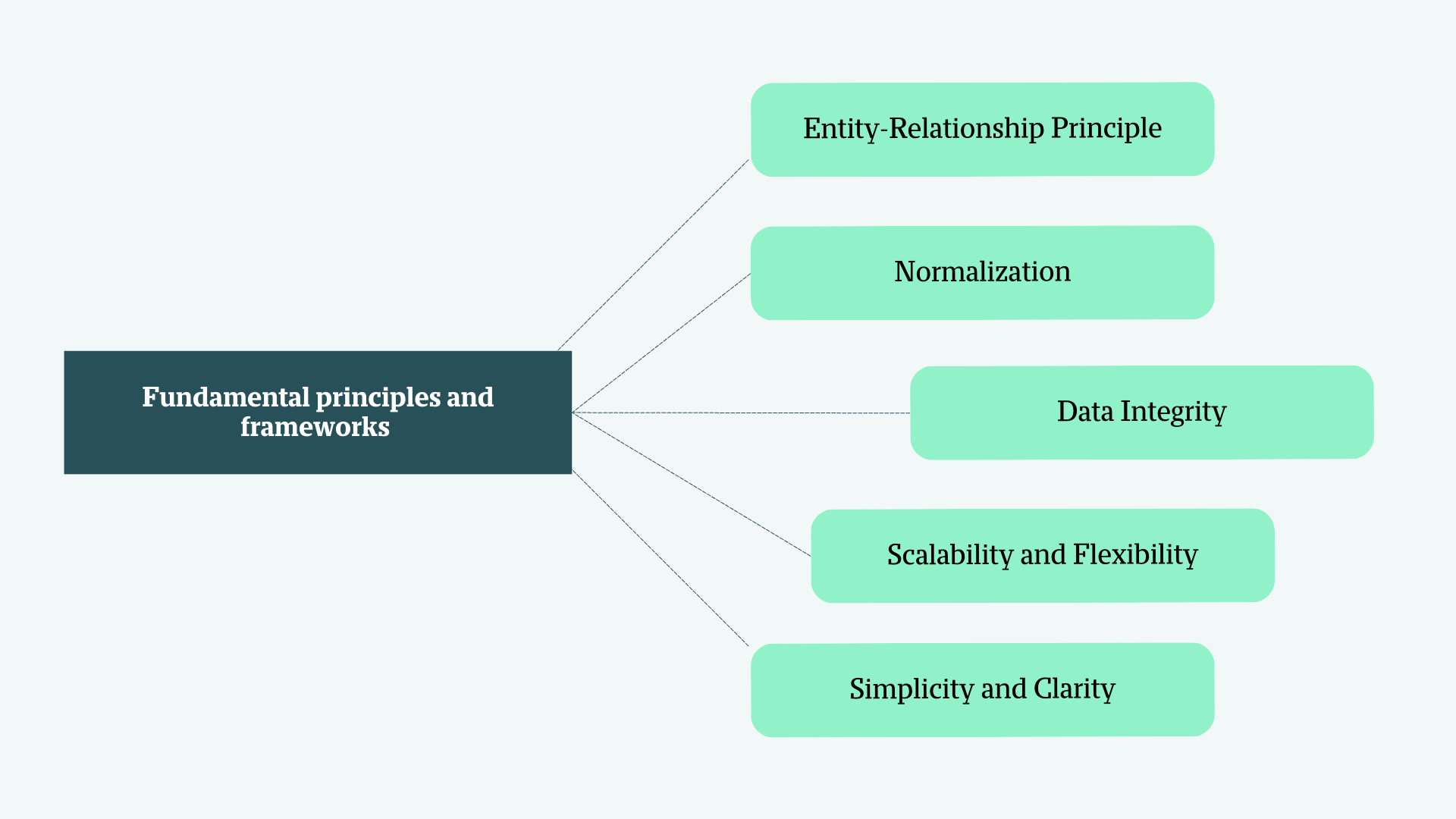

Fundamental principles and frameworks

In data modelling, several fundamental principles and frameworks guide the design of efficient and scalable databases. The Entity-Relationship (ER) model is central to this process, defining how different real-world objects or concepts, referred to as entities, interact with each other. Entities such as beneficiaries, relief items, donors, and distribution centers represent key components of humanitarian systems, and their relationships determine how data flows between them.

Additionally, data modelling involves principles like normalization to reduce redundancy, data integrity to maintain accuracy, and scalability and flexibility to ensure the system adapts to growing and changing needs. Ensuring simplicity and clarity in these models is crucial for usability across both technical and non-technical users.

1. What is the Entity-Relationship principle in data modelling?

Entities represent real-world objects or concepts, so defining how they interact is key in data modelling. In humanitarian work, entities could include beneficiaries, relief items, donors, and distribution centers.

Relationships: Define how these entities interact with each other. For instance, beneficiaries receive relief items or donors fund distribution centers.

Attributes: Characteristics of these entities, such as beneficiary ID, name, age, and location for "Beneficiaries" or item type, quantity, and expiration date for "Relief Items."
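The three ideas above can be sketched with Python dataclasses. This is only one possible representation: the class names, the `item_id` and `received_on` fields, and the sample values are assumptions added for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Beneficiary:
    """Entity with its attributes."""
    beneficiary_id: int
    name: str
    age: int
    location: str


@dataclass(frozen=True)
class ReliefItem:
    """Entity with its attributes."""
    item_id: int            # hypothetical identifier
    item_type: str
    quantity: int
    expiration_date: date


@dataclass(frozen=True)
class Receives:
    """Relationship: a beneficiary receives a relief item."""
    beneficiary_id: int
    item_id: int
    received_on: date       # hypothetical attribute of the relationship


beneficiary = Beneficiary(1, "A. Example", 34, "North Camp")
item = ReliefItem(10, "Hygiene kit", 500, date(2026, 12, 31))
receipt = Receives(beneficiary.beneficiary_id, item.item_id, date(2025, 1, 15))
print(receipt)
```

The relationship record holds only the identifiers of the two entities it connects, which is the same idea a relational database expresses with foreign keys.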

2. What is normalization in data modelling?

Normalization is a data modelling process used to organize a database into smaller, related tables. The primary purpose of normalization is to reduce data redundancy and ensure data integrity. By breaking down a large dataset into smaller, more focused tables, it minimizes duplicate data, ensuring consistency across the database. In humanitarian contexts, this is especially critical for maintaining clean, reliable data, such as avoiding multiple entries for the same beneficiaries or tracking distributed resources without overlap.

The normalization process involves dividing a large table into smaller, related tables and establishing clear relationships between them using foreign keys. This ensures that data is stored in an organized manner, making the database more efficient and scalable while ensuring data consistency across multiple entries.

Normalization proceeds through a series of steps known as normal forms:

- 1st Normal Form (1NF): Ensure all fields contain atomic (indivisible) values. E.g., instead of having a single name field, we separate it into first name and last name.

- 2nd Normal Form (2NF): Remove subsets of data that apply to multiple rows and place them in separate tables. E.g., create separate tables for "Locations" linked to "Beneficiaries" by location ID.

- 3rd Normal Form (3NF): Ensure all fields depend only on the primary key. E.g., in a beneficiary table you would remove fields that do not directly describe the beneficiary, such as relief item attributes, and place them in a separate table. This ensures that each field in the beneficiaries table relates to the beneficiary.
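The following sketch shows the general idea of splitting a flat dataset into smaller related tables, using plain Python dictionaries. The sample rows and field names, including `district`, are invented for illustration, and the code covers only the "Locations" example rather than a full normalization procedure.

```python
# Flat input: location details are repeated on every beneficiary row.
flat_rows = [
    {"beneficiary_id": 101, "first_name": "Amina", "last_name": "Example",
     "location_name": "North Camp", "district": "District A"},
    {"beneficiary_id": 102, "first_name": "Bilal", "last_name": "Example",
     "location_name": "North Camp", "district": "District A"},
    {"beneficiary_id": 103, "first_name": "Clara", "last_name": "Example",
     "location_name": "South Camp", "district": "District B"},
]

locations = {}      # location_id -> location attributes (the "Locations" table)
location_ids = {}   # (name, district) -> location_id, used to detect repeats
beneficiaries = []  # the "Beneficiaries" table

for row in flat_rows:
    key = (row["location_name"], row["district"])
    if key not in location_ids:
        # First time this location is seen: store it once and assign an ID.
        location_ids[key] = len(location_ids) + 1
        locations[location_ids[key]] = {"name": key[0], "district": key[1]}
    beneficiaries.append({
        "beneficiary_id": row["beneficiary_id"],
        "first_name": row["first_name"],   # atomic name fields, as in 1NF
        "last_name": row["last_name"],
        "location_id": location_ids[key],  # link to the Locations table
    })

print(locations)
print(beneficiaries)
```

After the split, correcting a location's name or district means changing one row in `locations` instead of every beneficiary row that mentions it.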

3. What is data integrity in data modelling?

Data integrity is a crucial aspect of data modelling because it ensures that data remains accurate, consistent, reliable, and complete over time. It is enforced through constraints such as primary keys (unique identifiers for each record) and foreign keys (which maintain relationships between tables).

Validation is essential in this step to enforce rules on data entry. Validation rules ensure that data entered into the system meets predefined criteria, such as valid age ranges, appropriate formats for email addresses, or proper location codes. By implementing validation, the system can prevent invalid data from being stored, further strengthening data integrity.

Example:

- Primary Key: Beneficiary ID in the "Beneficiaries" table, where we are collecting attributes such as name, age, gender, and location. Every beneficiary in this table has a unique ID, so no two records can share the same identifier. This is essential for maintaining data integrity, as it helps prevent duplication and confusion between different beneficiaries.

- Foreign Key: Location ID in the "Beneficiaries" table linking to the "Locations" table.

- Validation Rule: Ensure age is within a reasonable range (e.g., 0-120 years).
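The three kinds of rules in this example can be sketched with SQLite constraints through Python's built-in `sqlite3` module. The table layout, the helper `try_insert`, and the 0 to 120 age bound are assumptions chosen for illustration; a real system would set its own rules.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite only enforces foreign keys when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
    CREATE TABLE locations (
        location_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL
    );
    CREATE TABLE beneficiaries (
        beneficiary_id INTEGER PRIMARY KEY,                          -- primary key
        name           TEXT NOT NULL,
        age            INTEGER NOT NULL CHECK (age BETWEEN 0 AND 120), -- validation rule (assumed range)
        location_id    INTEGER NOT NULL REFERENCES locations(location_id) -- foreign key
    );
    INSERT INTO locations VALUES (1, 'North Camp');
    INSERT INTO beneficiaries VALUES (101, 'A. Example', 34, 1);
""")


def try_insert(values):
    """Hypothetical helper: True if the row is accepted, False if a constraint rejects it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute("INSERT INTO beneficiaries VALUES (?, ?, ?, ?)", values)
        return True
    except sqlite3.IntegrityError:
        return False


results = {
    "duplicate primary key": try_insert((101, "B. Example", 40, 1)),
    "unknown location": try_insert((102, "C. Example", 40, 99)),
    "age out of range": try_insert((103, "D. Example", 150, 1)),
    "valid row": try_insert((104, "E. Example", 28, 1)),
}
for case, accepted in results.items():
    print(case, "->", "accepted" if accepted else "rejected")
```

Placing the rules in the schema means every data entry path is checked the same way, rather than relying on each form or script to repeat the validation.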

4. What is scalability and flexibility in data modelling?

In data management, scalability and flexibility are critical considerations, especially in humanitarian efforts. Scalability refers to the system’s ability to expand in response to growing demands. As the number of beneficiaries or relief items grows over time, the data model must handle increasing volumes of data efficiently without requiring significant redesigns, so that humanitarian organizations can scale their operations to meet evolving needs. Techniques like table partitioning, which divides large datasets into smaller, more manageable pieces, are often employed to improve performance and data management as the system scales.

Flexibility allows the system to adapt to changing requirements, such as the introduction of new types of aid or additional data attributes. By ensuring that the data model can be modified without disrupting existing operations, organizations can remain agile and responsive to the dynamic nature of humanitarian work.

Example:

- Flexible Schema: Allows one to add new attributes to the "Beneficiaries" table (e.g., health status) without affecting existing data structures.

- Scalable Model: A scalable model is built to manage increasing amounts of data efficiently as the system expands. Partitioned tables break large datasets into smaller, easier-to-manage segments, for example by geographic region or time period. This approach allows the system to process data more quickly, as it only needs to retrieve relevant sections instead of the entire dataset, enhancing query performance. In a similar spirit, ActivityInfo's Custom Views let you save and reuse your column selection, filtering, and sorting on a form’s records, quickly switch between different views of your form data, and optionally share the views with other users.
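Both ideas can be sketched with Python's built-in `sqlite3` module. The schema, the `region` column, and the sample rows are assumptions for illustration. SQLite has no built-in table partitioning, so the grouping step below only imitates the idea in plain Python; it is not how a database engine implements partitions.

```python
import sqlite3
from collections import defaultdict

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE beneficiaries (
        beneficiary_id INTEGER PRIMARY KEY,
        name           TEXT NOT NULL,
        region         TEXT NOT NULL
    );
    INSERT INTO beneficiaries VALUES
        (101, 'A. Example', 'North'),
        (102, 'B. Example', 'South'),
        (103, 'C. Example', 'North');
""")

# Flexibility: add a new attribute. Existing rows are kept and receive NULL.
conn.execute("ALTER TABLE beneficiaries ADD COLUMN health_status TEXT")
conn.execute(
    "UPDATE beneficiaries SET health_status = 'Referred' WHERE beneficiary_id = 103"
)

# Partitioning idea, imitated in Python: group rows by region so that a
# lookup for one region only touches that group.
partitions = defaultdict(list)
query = """
    SELECT beneficiary_id, name, region, health_status
    FROM beneficiaries
    ORDER BY beneficiary_id
"""
for row in conn.execute(query):
    partitions[row[2]].append(row)

north = partitions["North"]
print(north)
```

The new column is added without rewriting or losing the rows that already existed, which is the property the flexible schema example describes.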

5. What is simplicity and clarity in data modelling?

In data management, simplicity and clarity are essential for ensuring that both technical and non-technical staff can easily understand and work with the system. This is especially important in humanitarian operations, where field workers, who may have limited technical expertise, are often responsible for data entry and management.

Simplicity means designing data models that are straightforward and user-friendly. This allows non-technical staff to interact with the system without confusion, promoting efficiency in data entry, updates, and reporting. Clarity comes from clear documentation, which should include definitions for all entities, relationships, and attributes within the data model. Well-maintained documentation also improves the long-term scalability and adaptability of the system, ensuring it remains useful as needs evolve.

Example:

- Simple Relationships: Clearly defined relationships between tables, such as a straightforward link between "Beneficiaries" and "Relief Items."

- Documentation: Detailed explanations of each entity and attribute, including data types and constraints. This can include a data dictionary, which is a document listing all entities, attributes, and their definitions, or an ER diagram, which is a visual representation of the entity-relationship model showing entities and their relationships.
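A basic data dictionary can be generated from the database itself. The sketch below assumes a SQLite database and uses its `PRAGMA table_info` command; the schema and the `data_dictionary` function are hypothetical, and human-written definitions for each field would still need to be added by hand.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE locations (
        location_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL
    );
    CREATE TABLE beneficiaries (
        beneficiary_id INTEGER PRIMARY KEY,
        name           TEXT NOT NULL,
        age            INTEGER,
        location_id    INTEGER REFERENCES locations(location_id)
    );
""")


def data_dictionary(connection):
    """List every table column with its declared type and basic constraints."""
    tables = [r[0] for r in connection.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    entries = []
    for table in tables:
        # PRAGMA table_info returns: cid, name, type, notnull, dflt_value, pk
        for _cid, column, col_type, notnull, _default, pk in connection.execute(
                f"PRAGMA table_info({table})"):
            entries.append({
                "table": table,
                "column": column,
                "type": col_type,
                "required": bool(notnull),
                "primary_key": bool(pk),
            })
    return entries


dictionary = data_dictionary(conn)
for entry in dictionary:
    print(entry)
```

Generating the structural part of the dictionary from the schema keeps it in step with the database as the model evolves.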

Conclusion

In summary, creating an effective data model is crucial for managing complex information systems, particularly in areas like humanitarian and development work. A well-structured model that adheres to core principles and frameworks provides a solid foundation for organizing and analyzing data. This enables organizations to develop systems that are flexible, secure, and efficient, effectively meeting their evolving data needs. Ultimately, a robust data model plays a vital role in ensuring successful information management and informed decision-making.

ActivityInfo is based on the relational database model and can support your data modelling efforts so that you can eliminate data silos not only in your projects but also in your organization as a whole. To learn more, do not hesitate to contact us.
